[Update 23/04/2015 – A recording of this webinar and the slides are now available online.]

Next Tuesday (14 April) I’ll be presenting a webinar entitled “Beyond Matched Pairs: Applying Matsy to predict new optimisation strategies”. The webinar will be hosted by our collaborators at Optibrium who have incorporated the Matsy algorithm into StarDrop (see earlier post). Here’s the abstract:

Join our webinar with Noel O’Boyle of NextMove Software to learn how matched series analysis can predict new chemical substitutions that are most likely to improve target activity for your projects.

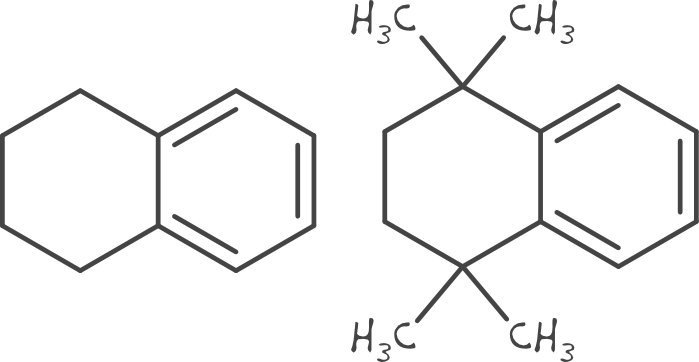

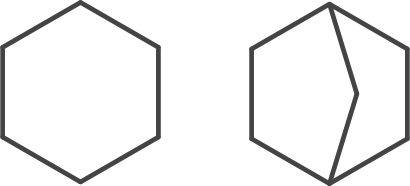

The Matsy™ algorithm for matched molecular series analysis grew out of a collaboration with computational chemists at AstraZeneca with the goal of supporting lead optimisation projects. Specifically, it was designed to answer the question, “What compound should I make next?”.

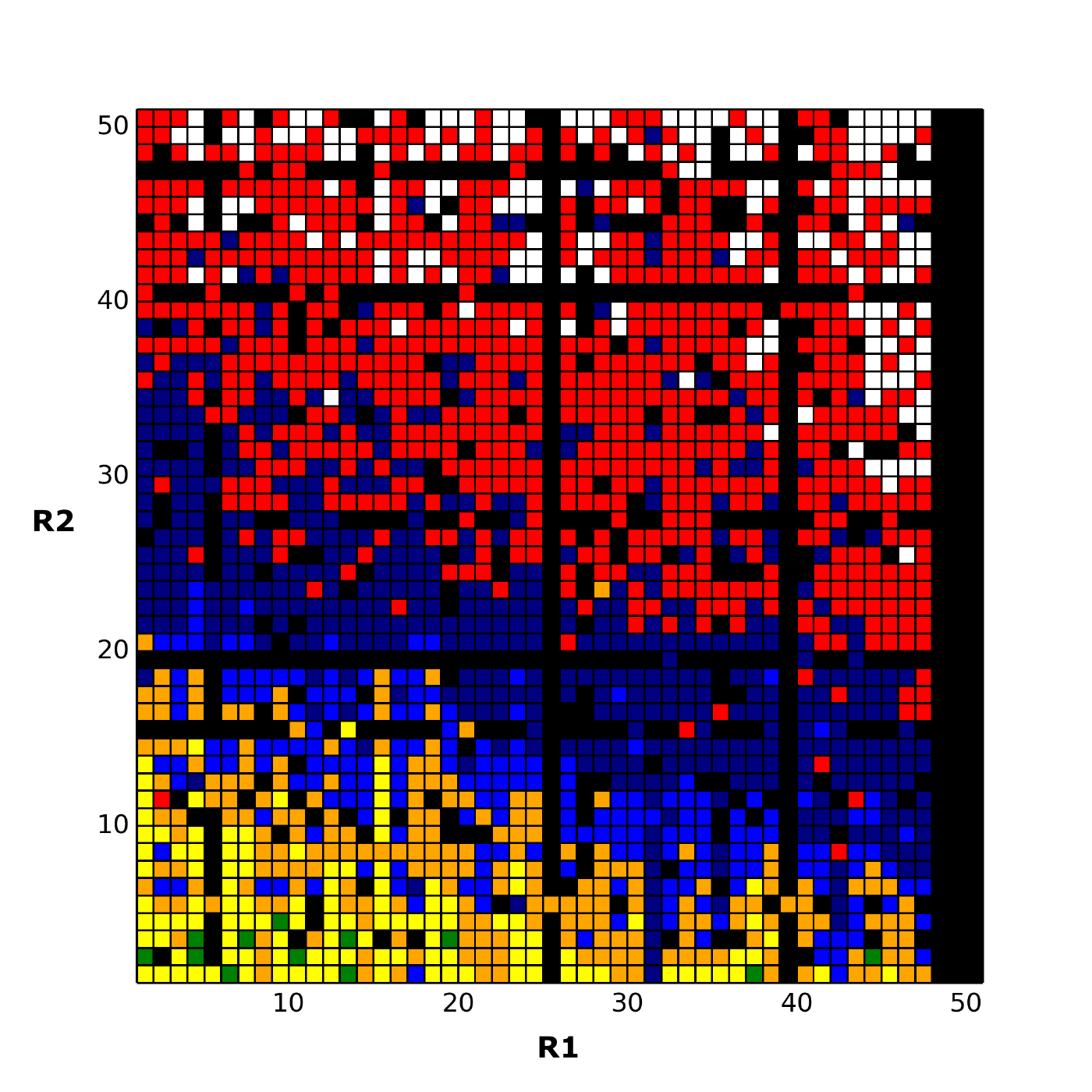

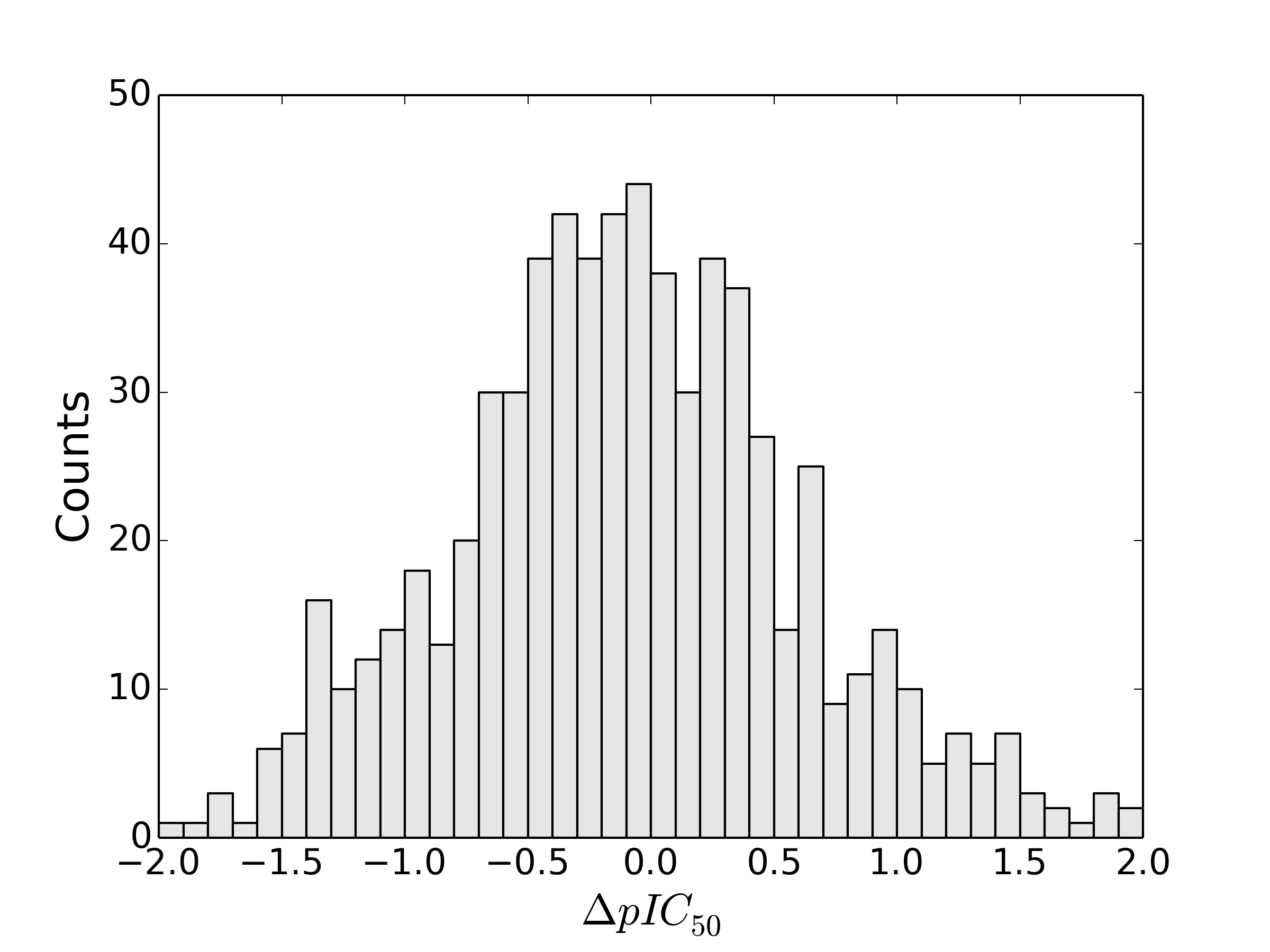

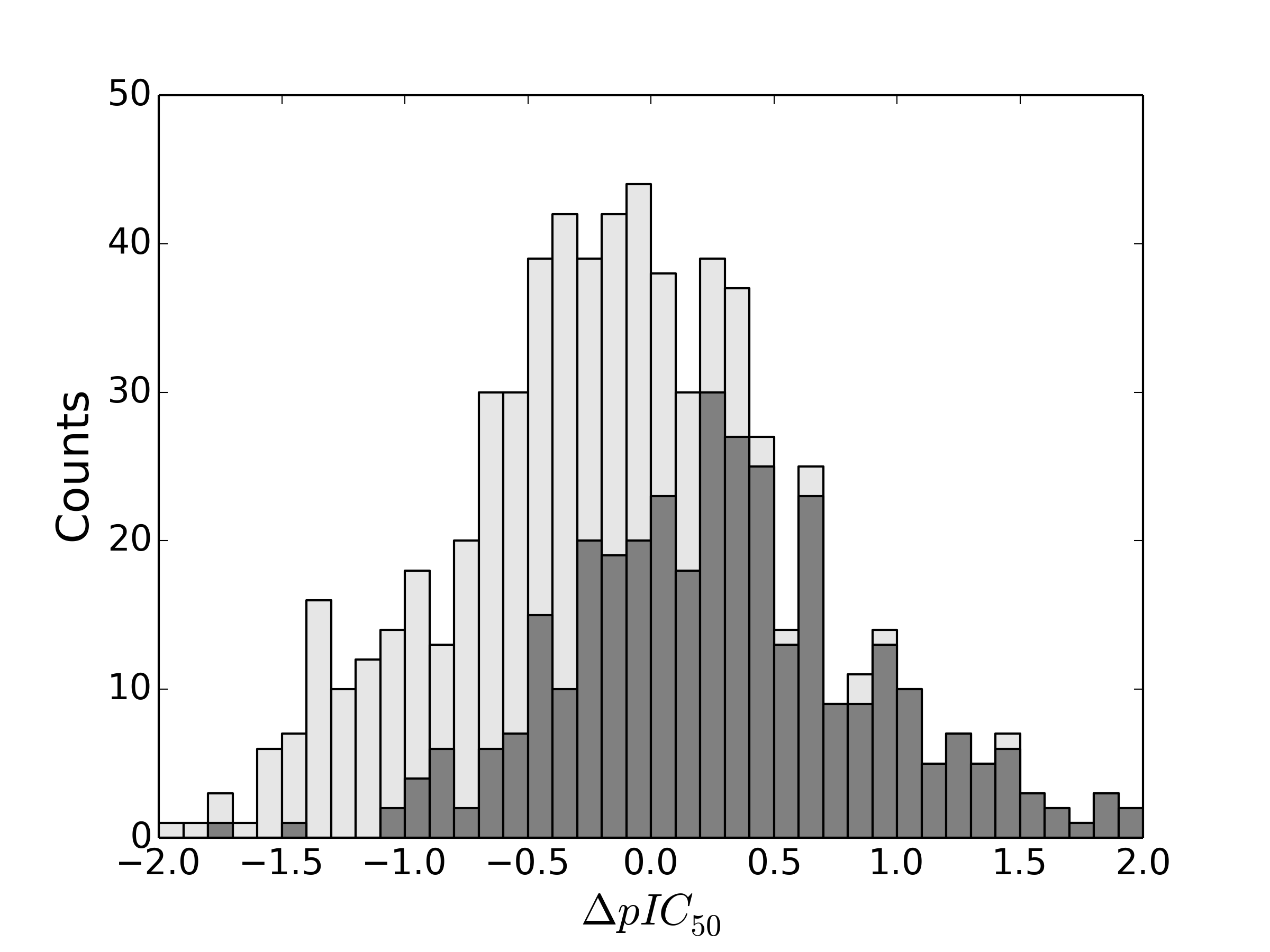

Matsy has been developed to generate and search in-house or public domain databases of matched molecular series to identify chemical substitutions that are most likely to improve target activity (J. Med. Chem., 2014, 57(6), pp 2704–2713). This goes beyond conventional ‘matched molecular pair analysis’ by using data from longer series of matched compounds (and not just pairs) to make more relevant predictions for a particular chemical series of interest. In addition, all predictions are backed by experimental results which can be viewed and assessed by the medicinal chemist when considering the predictions.
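To make the idea of predicting from matched series a little more concrete, here is a toy sketch in Python. It is not the Matsy implementation; the function name `predict_next`, the dictionary-based database layout and the activity values are all invented for illustration. It assumes each matched series is stored as a mapping from R group to a measured activity (higher is better), and that the query is a list of R groups ordered from most to least active. For every database series that contains the query R groups in the same activity order, it counts how often each other R group beats the best member of the query.

```python
from collections import defaultdict


def predict_next(query, database):
    """Rank R groups by how often they improve on an ordered query series.

    query    -- R groups ordered from most to least active (hypothetical input format)
    database -- list of matched series, each a dict of R group -> activity
    Returns a list of (r_group, times_improved, times_observed) tuples.
    """
    improved = defaultdict(int)
    observed = defaultdict(int)

    for series in database:
        # The series must contain every R group in the query...
        if not all(r in series for r in query):
            continue
        # ...and show the same strict activity order as the query.
        acts = [series[r] for r in query]
        if any(a <= b for a, b in zip(acts, acts[1:])):
            continue

        best = acts[0]
        for r_group, activity in series.items():
            if r_group in query:
                continue
            observed[r_group] += 1
            if activity > best:
                improved[r_group] += 1

    ranked = [(r, improved[r], observed[r]) for r in observed]
    # Sort by fraction improved, then by number of observations, then by name.
    ranked.sort(key=lambda t: (-t[1] / t[2], -t[2], t[0]))
    return ranked


# Made-up activities (e.g. pIC50 values) purely for illustration.
database = [
    {"F": 7.0, "Cl": 6.5, "H": 6.0, "OMe": 7.5},
    {"F": 8.1, "Cl": 7.9, "H": 7.0, "OMe": 8.4, "Me": 7.5},
    {"F": 5.0, "Cl": 5.5, "H": 4.0, "OMe": 6.0},  # different order, ignored
    {"F": 6.2, "Cl": 6.0, "H": 5.1, "Me": 6.5},
]

for r_group, n_improved, n_observed in predict_next(["F", "Cl", "H"], database):
    print(f"{r_group}: improved in {n_improved} of {n_observed} matching series")
```

Because each suggestion comes with the number of matching series behind it, the underlying observations can be inspected rather than taken on trust, which is the point made above about predictions being backed by experimental results.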

Matsy is applied in StarDrop’s Nova™ module, which automatically generates new compound structures to stimulate the search for optimisation strategies related to initial hit or lead compounds. StarDrop’s unique capabilities for multi-parameter optimisation and predictive modelling will enable efficient prioritisation of the resulting ideas to identify high quality compounds with the best chance of success.

I’ll be focusing on the science behind the algorithm itself rather than the specifics of its integration into StarDrop, so even if you’re not currently an Optibrium customer you may find this of interest.

To register, click on the image above or just here.